Difference between revisions of "Data Validation"

Revision as of 05:19, 10 February 2020

Overview

The Fusion Registry is able to validate datasets for which there is a Dataflow present in the Registry.

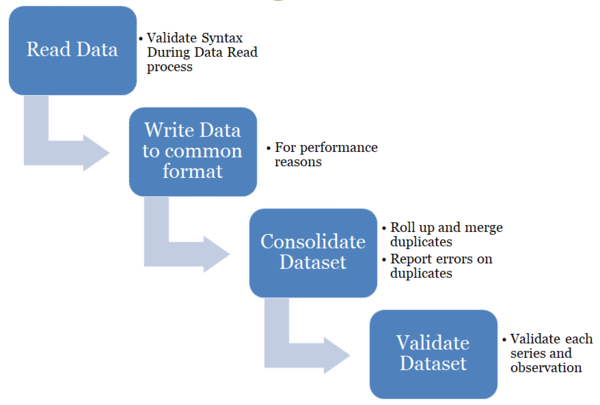

Data Validation is split into three high-level validation processes:

- Syntax Validation - is the syntax of the dataset correct

- Duplicates - format-agnostic process of rolling up duplicate series and observations

- Syntax Agnostic Validation - does the dataset contain the correct content

Data Validation can be performed either via the web User Interface of the Fusion Registry, or by POSTing data directly to the Fusion Registry's data validation web service.

Syntax Validation

Syntax Validation refers to validation of the reported dataset in terms of the file syntax. If the dataset is in SDMX-ML then this will ensure the XML is formatted correctly, and that the XML Elements and XML Attributes are as expected. If the dataset is in Excel Format (proprietary to the Fusion Registry) then these checks will ensure the data complies with the expected Excel format.
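As a rough illustration of the first of these checks, the sketch below tests only whether a piece of XML is well formed, using Python's standard library. It is not the Fusion Registry's implementation, the function name `is_well_formed` is hypothetical, and it does not check that the Elements and Attributes match what SDMX-ML expects.

```python
import xml.etree.ElementTree as ET


def is_well_formed(xml_text: str) -> bool:
    """Return True if xml_text parses as well-formed XML.

    Hypothetical helper for illustration only: it does not check the
    document against the SDMX-ML schemas.
    """
    try:
        ET.fromstring(xml_text)
    except ET.ParseError:
        return False
    return True


# A closed element tree parses; a mismatched closing tag does not.
balanced_ok = is_well_formed("<DataSet><Series/></DataSet>")
mismatched_ok = is_well_formed("<DataSet><Series></DataSet>")
```

A complete syntax check would additionally validate the document against the relevant SDMX-ML schema, which this sketch leaves out.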

Duplicates Validation

Part of the validation process is the consolidation of a dataset. Consolidation refers to ensuring any duplicate series are 'rolled up' into a single series. This process is important for data formats such as SDMX-EDI, where the series and observation attributes are reported at the end of a dataset, after all the observation values have been reported.

Example: Input Dataset Unconsolidated

| Frequency | Reference Area | Indicator | Time | Observation Value | Observation Note |

|---|---|---|---|---|---|

| A | UK | IND_1 | 2009 | 12.2 | - |

| A | UK | IND_1 | 2010 | 13.2 | - |

| A | UK | IND_1 | 2009 | - | A Note |

After Consolidation:

| Frequency | Reference Area | Indicator | Time | Observation Value | Observation Note |

|---|---|---|---|---|---|

| A | UK | IND_1 | 2009 | 12.2 | A Note |

| A | UK | IND_1 | 2010 | 13.2 | - |

The above consolidation process does not report the duplicate as an error, because the duplicate does not report contradictory information; it supplies extra information. If the dataset were to contain two series with contradictory observation values or attributes, this would be reported as a duplication error.

Example: Duplicate error for the observation value reported for 2009

| Frequency | Reference Area | Indicator | Time | Observation Value | Observation Note |

|---|---|---|---|---|---|

| A | UK | IND_1 | 2009 | 12.2 | - |

| A | UK | IND_1 | 2010 | 13.2 | - |

| A | UK | IND_1 | 2009 | 12.3 | A Note |
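The consolidation behaviour shown in the tables above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Fusion Registry's implementation: the names `consolidate`, `DuplicateError` and `row` are hypothetical, rows are plain dictionaries, and `None` stands for the "-" cells in the tables.

```python
from typing import Dict, List, Optional, Tuple

# One reported row; None stands for a "-" (not reported) cell.
Row = Dict[str, Optional[str]]

KEY_FIELDS = ("Frequency", "Reference Area", "Indicator", "Time")
VALUE_FIELDS = ("Observation Value", "Observation Note")


class DuplicateError(ValueError):
    """Hypothetical error: two rows for one key contradict each other."""


def consolidate(rows: List[Row]) -> List[Row]:
    """Roll up rows sharing the same key, keeping first-seen order."""
    merged: Dict[Tuple[Optional[str], ...], Row] = {}
    for current in rows:
        key = tuple(current[f] for f in KEY_FIELDS)
        # Start a new consolidated row the first time a key is seen.
        target = merged.setdefault(key, {f: current[f] for f in KEY_FIELDS})
        for field in VALUE_FIELDS:
            new = current.get(field)
            if new is None:
                continue  # nothing reported, so nothing to merge
            old = target.get(field)
            if old is not None and old != new:
                raise DuplicateError(
                    f"{key}: {field} reported as {old!r} and {new!r}"
                )
            target[field] = new
    # Make every consolidated row carry all value fields.
    for target in merged.values():
        for field in VALUE_FIELDS:
            target.setdefault(field, None)
    return list(merged.values())


def row(time: str, value: Optional[str] = None,
        note: Optional[str] = None) -> Row:
    """Build a row for the A / UK / IND_1 series used in the examples."""
    return {"Frequency": "A", "Reference Area": "UK", "Indicator": "IND_1",
            "Time": time, "Observation Value": value,
            "Observation Note": note}


# First example: the extra 2009 row only adds a note, so it is rolled up.
result = consolidate(
    [row("2009", "12.2"), row("2010", "13.2"), row("2009", note="A Note")]
)
```

Passing the rows of the second example (where 2009 is reported as both 12.2 and 12.3) to `consolidate` raises `DuplicateError` in this sketch, mirroring the duplication error described above.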

Security

By default, Data Validation is a public service, so a user can perform data validation without authenticating. It is possible to change the security level in the Registry to either:

- Require that a user is authenticated before they can perform ANY data validation

- Require that a user is authenticated before they can perform data validation on a dataset obtained from a URL