Data Validation

(→Performance) |

(→Overview) |

||

| (23 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | [[Category: | + | [[Category:Concepts_Reference_V10]] |

| + | [[Category:Concepts_Reference_V11]] | ||

= Overview = | = Overview = | ||

| − | <p> | + | <p><strong>Data Validation</strong> checks principally whether a dataset conforms to the Data Structure Definition and complies with any specified Content Constraints.</p> |

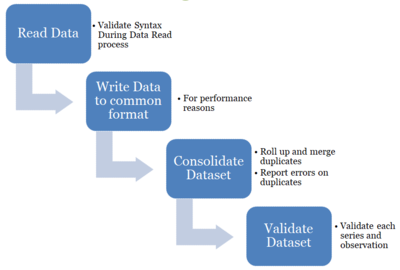

| − | <p> | + | <p>In Fusion Metadata Registry, data validation is a three-step process:</p> |

<ol> | <ol> | ||

<li><strong>Syntax Validation</strong> - is the syntax of the dataset correct</li> | <li><strong>Syntax Validation</strong> - is the syntax of the dataset correct</li> | ||

| Line 11: | Line 12: | ||

</ol> | </ol> | ||

| − | [[File:Data-validation-process.png| | + | [[File:Data-validation-process.png|400px]] |

<p> | <p> | ||

| − | <p>Data Validation can either be performed via the web User Interface of the | + | <p>Data Validation can either be performed via the web User Interface of the Registry, or by POSTing data directly to the Registries' [[Data_Validation_Web_Service|data validation web service]].</p> |

= Syntax Validation = | = Syntax Validation = | ||

| − | Syntax Validation refers to | + | Syntax Validation refers to validation of the reported dataset in terms of the file syntax. If the dataset is in SDMX-ML then this will ensure the XML is formatted correctly, and the XML Elements and XML Attributes are as expected. If the dataset is in Excel Format (proprietary to the Fusion Metadata Registry) then these checks will ensure the data complies with the expected Excel format. |

= Duplicates Validation = | = Duplicates Validation = | ||

| Line 61: | Line 62: | ||

= Syntax Agnostic Validation = | = Syntax Agnostic Validation = | ||

<p> | <p> | ||

| − | Syntax Agnostic Validation is where | + | Syntax Agnostic Validation is where most of the data validation process happens. Like the name suggests, the validation is syntax agnostic, and therefore the same validation rules and processes are applied to all datasets, regardless of the format the data was uploaded in.</p> |

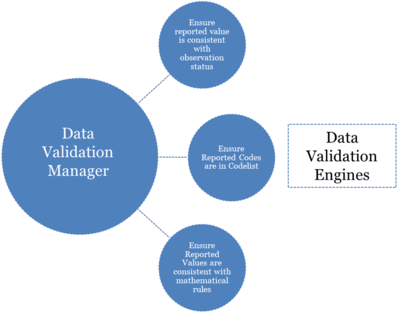

| − | <p>This validation process makes use of a single '''Validation Manager''' and multiple '''Validation Engines'''. The validation manager walks the contents of the dataset (Series and Observations) in a streaming | + | <p>This validation process makes use of a single '''Validation Manager''' and multiple '''Validation Engines'''. The validation manager walks the contents of the dataset (Series and Observations) in a streaming fashion, and as each new Series or Observation is read in, it asks the same question to each registered Validation Engine - the question is ''"is this valid?"''.</p> |

<p>An conceptual example of the Validation Manager delegating validation questions to each Validation Engine in turn</p> | <p>An conceptual example of the Validation Manager delegating validation questions to each Validation Engine in turn</p> | ||

| − | [[File:Data-validation-engine.png| | + | [[File:Data-validation-engine.png|400px]] |

| − | <p>The purpose of a Validation Engine is to perform ONE type of validation, this allows configuration of each validation engine as a | + | <p>The purpose of a Validation Engine is to perform ONE type of validation, this allows configuration of each validation engine as a separate entity, and new validation engines can be easily added to the product if there is a new type of validation rule to implement. Validation Engines can be switched off, or have a different level of error reporting set, validation engines can also have a error limit set, so that a single engine can be decommissioned from validating a particular dataset if it is reporting too many errors. In the validation report that is produced, the errors are grouped per validation engine.</p> |

<p>The following table shows each validation engine and its purpose</p> | <p>The following table shows each validation engine and its purpose</p> | ||

| Line 99: | Line 100: | ||

|} | |} | ||

| − | = | + | = Validation with Transformation = |

| − | + | Fusion Registry supports a validation process, which combines both data validation with data transformation. The output can just be the valid dataset (with invalid observations removed) or both the valid dataset, and invalid dataset. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | See the [[Data_Validation_Web_Service|Data Validation Web Service]] for details on how to achieve this. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

= Performance = | = Performance = | ||

| − | <p>The data format has some impact on performance time, as the time taken to perform the initial data read and syntax specific validation rules are format specific. After the initial checks are performed, an intermediary data format is used to perform consolidation and syntax | + | <p>The data format has some impact on performance time, as the time taken to perform the initial data read and syntax specific validation rules are format specific. After the initial checks are performed, an intermediary data format is used to perform consolidation and syntax agnostic checks. Therefore the performance of the data consolidation stage and syntax agnostic data validation is the same regardless of import format.</p> |

<p>Considerations to take to optimise performance is</p> | <p>Considerations to take to optimise performance is</p> | ||

| Line 355: | Line 113: | ||

<li>In the case the dataset is coming from a URL support gzip response<li> | <li>In the case the dataset is coming from a URL support gzip response<li> | ||

<li>Use a fast hard drive to optimise I/O as temporary files will be used in the case of validating large datasets</li> | <li>Use a fast hard drive to optimise I/O as temporary files will be used in the case of validating large datasets</li> | ||

| − | <li>Performance is | + | <li>Performance is dependent on CPU speed</li> |

</ul> | </ul> | ||

| − | <p>When the server | + | <p>When the server receives a zip file, there is some overhead in unzipping the file, but this overhead is very small compare to the performance gains in network transfer times.</p> |

<p>Tests have been carried out on a dataset with the following properties</p> | <p>Tests have been carried out on a dataset with the following properties</p> | ||

| Line 375: | Line 133: | ||

<p>The following table shows the file size, and validation duration for each data format</p> | <p>The following table shows the file size, and validation duration for each data format</p> | ||

| − | + | ||

| − | + | {| class="wikitable sortable" | |

| − | {| class="wikitable" | ||

|- | |- | ||

| − | ! Data Format !! File | + | ! Data Format !! File Size <br/>Uncompressed!! File Size <br/>Zipped !! Syntax Validation <br/>(seconds) !! Data Consolidation<br/>(seconds) !! Validate Dataset<br/>(seconds) !! Total Duration<br/>(seconds) |

|- | |- | ||

| SDMX Generic 2.1 || 3.6Gb || 94Mb || 165 || 37 || 57 || 312 | | SDMX Generic 2.1 || 3.6Gb || 94Mb || 165 || 37 || 57 || 312 | ||

| Line 391: | Line 148: | ||

| SDMX EDI || 153Mb || 71Mb || 68 || 36 || 58 || 230 | | SDMX EDI || 153Mb || 71Mb || 68 || 36 || 58 || 230 | ||

|} | |} | ||

| + | <p><b>Note 1:</b> All times are rounded to the nearest second</p> | ||

| + | <p><b>Note 2:</b> Total duration includes other processes which are not included in this table</p> | ||

| + | |||

| + | = Web Service = | ||

| + | As an alternative to the Fusion Registry web User Interface [[Data_Validation_Web_Service|data validation web service]] can be used to validate data from external tools or processes. | ||

= Security = | = Security = | ||

Latest revision as of 04:53, 28 March 2024


Overview

Data Validation principally checks whether a dataset conforms to the Data Structure Definition (DSD) and complies with any specified Content Constraints.

In Fusion Metadata Registry, data validation is a three-step process:

- Syntax Validation - is the syntax of the dataset correct?

- Duplicates - a format-agnostic process of rolling up duplicate series and observations

- Syntax Agnostic Validation - does the dataset contain the correct content?

Data Validation can be performed either via the web User Interface of the Registry, or by POSTing data directly to the Registry's data validation web service.

Syntax Validation

Syntax Validation refers to validation of the reported dataset in terms of the file syntax. If the dataset is in SDMX-ML then this will ensure the XML is formatted correctly, and the XML Elements and XML Attributes are as expected. If the dataset is in Excel Format (proprietary to the Fusion Metadata Registry) then these checks will ensure the data complies with the expected Excel format.

Duplicates Validation

Part of the validation process is the consolidation of a dataset. Consolidation refers to ensuring any duplicate series are 'rolled up' into a single series. This process is important for data formats such as SDMX-EDI, where the series and observation attributes are reported at the end of a dataset, after all the observation values have been reported.

Example: Input Dataset Unconsolidated

| Frequency | Reference Area | Indicator | Time | Observation Value | Observation Note |

|---|---|---|---|---|---|

| A | UK | IND_1 | 2009 | 12.2 | - |

| A | UK | IND_1 | 2010 | 13.2 | - |

| A | UK | IND_1 | 2009 | - | A Note |

After Consolidation:

| Frequency | Reference Area | Indicator | Time | Observation Value | Observation Note |

|---|---|---|---|---|---|

| A | UK | IND_1 | 2009 | 12.2 | A Note |

| A | UK | IND_1 | 2010 | 13.2 | - |

The above consolidation process does not report the duplicate as an error, because the duplicate does not report contradictory information; it supplies extra information. If the dataset were to contain two series with contradictory observation values or attributes, this would be reported as a duplication error.

Example: Duplicate error for the observation value reported for 2009

| Frequency | Reference Area | Indicator | Time | Observation Value | Observation Note |

|---|---|---|---|---|---|

| A | UK | IND_1 | 2009 | 12.2 | - |

| A | UK | IND_1 | 2010 | 13.2 | - |

| A | UK | IND_1 | 2009 | 12.3 | A Note |
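The roll-up behaviour shown in the examples above can be sketched in a few lines of Python. This is a minimal illustration only, not the Registry's implementation: the function name `consolidate`, the row layout, and the error message wording are all assumptions made for the example.

```python
def consolidate(rows):
    """Roll up duplicate observations.

    Each row is (series_key, time_period, obs_value, obs_note), where None
    means "not reported". Returns (merged, errors). The row layout is a
    hypothetical one chosen for this sketch.
    """
    merged = {}
    errors = []
    for key, period, value, note in rows:
        obs_id = (key, period)
        if obs_id not in merged:
            merged[obs_id] = {"value": value, "note": note}
            continue
        current = merged[obs_id]
        for name, reported in (("value", value), ("note", note)):
            if reported is None:
                continue  # the duplicate adds nothing for this field
            if current[name] is None:
                current[name] = reported  # extra information: merge it in
            elif current[name] != reported:
                # contradictory information: report a duplication error
                errors.append(
                    f"Duplicate {name} for {key} at {period}: "
                    f"{current[name]} vs {reported}"
                )
    return merged, errors


series = ("A", "UK", "IND_1")
merged, errors = consolidate([
    (series, "2009", 12.2, None),
    (series, "2010", 13.2, None),
    (series, "2009", None, "A Note"),
])
```

With the first example dataset, the 2009 observation ends up with both its value and its note and no errors are raised. Changing the third row's value to 12.3 produces one duplication error, as in the second example.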

Syntax Agnostic Validation

Syntax Agnostic Validation is where most of the data validation process happens. As the name suggests, the validation is syntax agnostic, so the same validation rules and processes are applied to all datasets, regardless of the format the data was uploaded in.

This validation process makes use of a single Validation Manager and multiple Validation Engines. The Validation Manager walks the contents of the dataset (Series and Observations) in a streaming fashion, and as each new Series or Observation is read in, it asks each registered Validation Engine the same question: "is this valid?".

A conceptual example of the Validation Manager delegating validation questions to each Validation Engine in turn.

The purpose of a Validation Engine is to perform ONE type of validation. This allows each validation engine to be configured as a separate entity, and new validation engines can easily be added to the product if there is a new type of validation rule to implement. Validation Engines can be switched off or have a different level of error reporting set. A validation engine can also have an error limit set, so that a single engine is decommissioned from validating a particular dataset if it reports too many errors. In the validation report that is produced, the errors are grouped per validation engine.

The following table shows each validation engine and its purpose

| Validation Type | Validation Description |

|---|---|

| Structure | Ensures the Dataset reports all Dimensions and does not include any additional Dimensions or Attributes |

| Representation | Ensures the reported values for Dimensions, Attributes, and Observation values comply with the DSD |

| Mandatory Attributes | Ensures all Attributes, as defined in the Data Structure Definition (DSD), are reported if they are marked as Mandatory |

| Constraints | Ensures the reported values have not been disallowed due to Content Constraint definitions |

| Mathematical Rules | Performs any mathematical calculations, defined in Validation Schemes, to ensure compliance |

| Frequency Match | Ensures the reported Frequency code matches the reported time period. Example: FREQ=A will expect time periods in format YYYY |

| Obs Status Match | Ensures observation values are in keeping with the Observation Status. Example: Missing Value does not expect a value to be reported |

| Missing Time Period | Ensures the Series has no holes in the reported time periods |
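The manager-and-engines pattern described above can be sketched as follows. This is a conceptual illustration under stated assumptions, not the product's code: the `Engine` class, the `validate` function, the dictionary keys (`FREQ`, `TIME_PERIOD`, `OBS_STATUS`, `OBS_VALUE`) and the status code `"M"` are hypothetical names chosen for the example.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Engine:
    """One engine performs ONE type of validation (hypothetical class)."""
    name: str
    check: Callable[[dict], Optional[str]]  # error message, or None if valid
    enabled: bool = True
    error_limit: int = 100
    errors: list = field(default_factory=list)


def frequency_match(obs):
    # Rule from the table: FREQ=A expects time periods in format YYYY.
    if obs["FREQ"] == "A" and not re.fullmatch(r"\d{4}", obs["TIME_PERIOD"]):
        return f"FREQ=A expects YYYY, got {obs['TIME_PERIOD']}"
    return None


def obs_status_match(obs):
    # Assumption: status code "M" stands for Missing Value in this sketch.
    if obs.get("OBS_STATUS") == "M" and obs.get("OBS_VALUE") is not None:
        return "Missing Value status does not expect an observation value"
    return None


def validate(observations, engines):
    """Stream the observations once, asking each active engine 'is this valid?'."""
    for obs in observations:
        for engine in engines:
            if not engine.enabled:
                continue
            message = engine.check(obs)
            if message is not None:
                engine.errors.append(message)
                if len(engine.errors) >= engine.error_limit:
                    # Decommission this engine for the rest of the dataset.
                    engine.enabled = False
    # Errors are grouped per validation engine in the report.
    return {engine.name: engine.errors for engine in engines}
```

Because each engine is a separate object, adding a new rule in this sketch means writing one more check function and registering one more `Engine`, without touching the loop in `validate`.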

Validation with Transformation

Fusion Registry supports a process that combines data validation with data transformation. The output can be just the valid dataset (with invalid observations removed), or both the valid dataset and the invalid dataset.

See the Data Validation Web Service for details on how to achieve this.
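Conceptually, the two possible outputs amount to partitioning the observations by validation outcome. The sketch below illustrates the idea only; the function `split_dataset` and the predicate passed to it are hypothetical, and the actual request parameters are documented on the Data Validation Web Service page.

```python
def split_dataset(observations, is_valid):
    """Partition observations into (valid, invalid) lists.

    `is_valid` is any callable returning True for an acceptable observation;
    it stands in for the full set of validation engines in this sketch.
    """
    valid, invalid = [], []
    for obs in observations:
        (valid if is_valid(obs) else invalid).append(obs)
    return valid, invalid


# Hypothetical rule for illustration: an observation needs a reported value.
observations = [
    {"TIME_PERIOD": "2009", "OBS_VALUE": 12.2},
    {"TIME_PERIOD": "2010", "OBS_VALUE": None},
]
valid, invalid = split_dataset(observations, lambda o: o["OBS_VALUE"] is not None)
```

A caller that only wants the clean dataset keeps `valid` and discards `invalid`; a caller that wants both can write each list to its own output.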

Performance

The data format has some impact on performance, as the time taken to perform the initial data read and the syntax-specific validation rules depends on the format. After the initial checks are performed, an intermediary data format is used to perform consolidation and syntax agnostic checks. Therefore the performance of the data consolidation stage and the syntax agnostic data validation is the same regardless of import format.

To optimise performance, consider the following:

- To reduce network traffic, upload the data file as a Zip

- If the dataset is loaded from a URL, ensure the server at that URL supports gzip responses

- Use a fast hard drive to optimise I/O as temporary files will be used in the case of validating large datasets

- Performance is dependent on CPU speed

When the server receives a zip file, there is some overhead in unzipping the file, but this overhead is very small compared with the performance gains in network transfer times.
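As an illustration of the first recommendation, a data file can be zipped in memory before it is uploaded. The sketch below uses only the Python standard library; the helper name `zip_bytes`, the file name, and the sample payload are made up for the example, and the upload step itself is deliberately left out.

```python
import io
import zipfile


def zip_bytes(filename, payload):
    """Return a zip archive (as bytes) containing `payload` under `filename`."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(filename, payload)
    return buffer.getvalue()


# Repetitive, text-based data such as CSV or XML usually compresses well.
sample = b"A,UK,IND_1,2009,12.2\n" * 10_000
zipped = zip_bytes("dataset.csv", sample)
print(len(sample), "bytes uncompressed,", len(zipped), "bytes zipped")
```

The compression ratio depends on the data, which is consistent with the differing zipped sizes per format in the test results.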

Tests have been carried out on a dataset with the following properties

| Property | Value |

|---|---|

| Series | 216,338 |

| Observations | 15,470,893 |

| Dimensions | 15 |

| Dataset Attributes | 3 |

| Observation Attributes | 2 |

The following table shows the file size, and validation duration for each data format

| Data Format | File Size Uncompressed | File Size Zipped | Syntax Validation (seconds) | Data Consolidation (seconds) | Validate Dataset (seconds) | Total Duration (seconds) |

|---|---|---|---|---|---|---|

| SDMX Generic 2.1 | 3.6Gb | 94Mb | 165 | 37 | 57 | 312 |

| SDMX Compact 2.1 | 1.1Gb | 59Mb | 84 | 37 | 57 | 251 |

| SDMX JSON | 362Mb | 41Mb | 65 | 36 | 58 | 224 |

| SDMX CSV | 1.3Gb | 80Mb | 71 | 36 | 58 | 240 |

| SDMX EDI | 153Mb | 71Mb | 68 | 36 | 58 | 230 |

Note 1: All times are rounded to the nearest second

Note 2: Total duration includes other processes which are not included in this table
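Note 2 can be made concrete with a little arithmetic on the published figures. The sketch below copies the timings from the table and derives, for each format, the time not accounted for by the three listed stages, plus an overall throughput. The function names are hypothetical; the numbers are taken directly from the tables above.

```python
# (syntax validation, data consolidation, validate dataset, total), in seconds
TIMINGS = {
    "SDMX Generic 2.1": (165, 37, 57, 312),
    "SDMX Compact 2.1": (84, 37, 57, 251),
    "SDMX JSON": (65, 36, 58, 224),
    "SDMX CSV": (71, 36, 58, 240),
    "SDMX EDI": (68, 36, 58, 230),
}
OBSERVATIONS = 15_470_893  # size of the test dataset


def other_processing(data_format):
    """Seconds of the total duration not covered by the three listed stages."""
    syntax, consolidation, validation, total = TIMINGS[data_format]
    return total - (syntax + consolidation + validation)


def observations_per_second(data_format):
    """End-to-end throughput based on the total duration."""
    return OBSERVATIONS / TIMINGS[data_format][3]


for name in TIMINGS:
    print(f"{name}: {other_processing(name)}s other, "
          f"{observations_per_second(name):,.0f} obs/s")
```

Because the published times are rounded to the nearest second, the derived values are approximate.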

Web Service

As an alternative to the Fusion Registry web User Interface, the data validation web service can be used to validate data from external tools or processes.

Security

Data Validation is by default a public service, so a user can perform data validation with no authentication required. It is possible to change the security level in the Registry to either:

- Require that a user is authenticated before they can perform ANY data validation

- Require that a user is authenticated before they can perform data validation on a dataset obtained from a URL